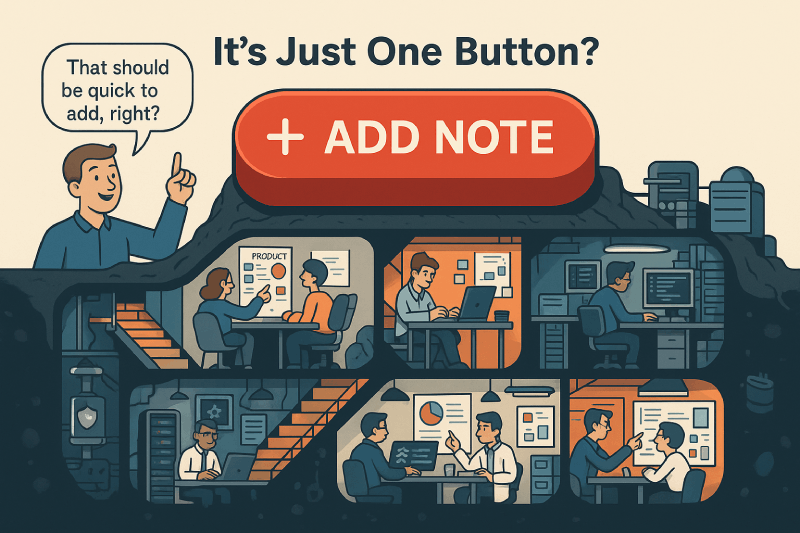

That should be a quick thing to add, right?

– Lots of customers, including me three years ago

I was inspired to write this after reading Matthew Weir’s post on Reddit. Matt did an excellent AMA (well worth reading) in the MSP subreddit, and something he said resonated really deeply with me:

I say “real software company” because I see a lot of MSPs get confused in the idea that their techs that have written code to automate stuff in their MSP or for their clients are “developers”– this couldn’t be further from the truth. I believe it to be a disservice to our MSP clients to sell these sorts of services from our misconception of what real dev looks like.

- Matt Weir (Fulcrum Insights)

As someone with experience on both sides of the fence (I am currently a Director of Product Management at NinjaOne, and before that I spent 15 years in an MSP), I wanted to dig into what these differences are and give you a peek behind the curtain. When software development is done properly, adding even a simple feature request is neither as easy nor as quick as most people think. Let’s pick a pretty simple use case as the example, one based on reality.

Adding multiple notes against a device

On the surface, this sounds like a simple request, right? Add a UI element and a new column to the database, and it’s done in an afternoon! This couldn’t be further from the truth.

It starts with good research and validation

Before we even start building a feature, we as product managers research the problem itself, validating it before we even start to consider requirements. This involves interviewing clients (of different sizes and types), reading and collating insights submitted as feature requests, and soliciting feedback from internal stakeholders like support and sales engineers. Depending on the feature, we would also pull quantitative data and assess that. We would build personas by asking questions like: who is likely to want to add notes against a device? All of this is done to validate our understanding of what the core problem is and what opportunities are available to fix it.

Once we understand the user pain, we translate that understanding into defined requirements detailing how exactly we can solve that pain.

Building a feature into a product that does not solve the core of a user’s problem is the second worst thing you can do as a product manager. The first worst thing is using the word ‘Synergy’ in a feature description.

This is not too dissimilar from the process used in good IT problem resolution: the “Why, Why, Why”. Getting to the root cause is critical to make sure you’re not just dealing with symptoms, but actually building a feature that solves the root cause of the pain. I think of this as the “Ender’s Game” approach, after the book/movie. Ender (a character in the book) won not by just reacting tactically to the enemy ships he saw, but by relentlessly digging deeper to understand the fundamental nature and motivation of the Formics (the enemy), the why behind their actions. That allowed him to find the single action that solved the entire problem at its root.

Important note: Gavsto does not encourage wiping out all end users or customers, even though that’s how Ender solved his problem.

Problems become requirements

Now that we understand the root problem, we build up the product requirements. That initially involves coming up with the solution that resolves the underlying problem. A text box that can submit? Just add a column, right? Wrong! As a product manager approaching this, I am considering things like:

- What is the XSS (Cross-Site Scripting), or similar, impact of this? We need to get this independently assessed by our security team.

- What characters do I need to support in the text? As a multi-region, multi-language product we need to make sure that special characters not present in US/UK English work properly.

- What actions do I need to take against this note? Delete? Edit?

- What information is important to see about the note? The user that added it? The date it was added?

- How do I sort the notes? Newest first? Oldest first?

- How many notes should we allow to be created against a single device?

- How long should a single note be allowed to be? How many characters? Do I need to display this limit to the user?

- Where does the note go on the device screen?

- Do we allow it against all Device types?

- How could we group notes here to get a good experience for our users?

- What permissions need to be present for a user to add a note, or even see a note!?

- How do we audit creation, edit and deletion of notes?

- Should it be rich text or just standard text?

- How does the user Save? Auto-save? Button? What feedback, if any, do they get when the note is added? What if the note addition errors or fails?

- Do I need to add anything into the API?

- Do I need to add this into the Device Search?

- How would performance be in the Device Search with notes maxed out?

- What operators do I need for filtering based on note?

- How do we display 4000 characters in a column?

I honestly could go on, but I want to delve into some of these specific points to highlight their importance, and also their difficulty.
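To take just the first bullet: the usual defence against stored XSS is to treat note text as untrusted and encode it on output. The sketch below is a minimal Python illustration using the standard library’s `html.escape`. The function name `render_note_html` and the `device-note` CSS class are made up for this example, and it is not a description of how any particular product handles it.

```python
import html


def render_note_html(note_text: str) -> str:
    """Wrap a note in a paragraph tag, encoding it so it renders as text.

    Hypothetical helper: html.escape converts &, <, > and quote characters
    into HTML entities, so markup typed into a note is displayed, not run.
    """
    return '<p class="device-note">' + html.escape(note_text) + "</p>"


if __name__ == "__main__":
    # A hostile note and a perfectly innocent non-English one.
    print(render_note_html('<script>alert("hi")</script>'))
    print(render_note_html("Größe prüfen, 日本語 OK"))
```

The non-English characters pass through untouched, so encoding for safety and supporting multiple languages do not pull against each other. Output encoding is only one layer, which is part of why an independent security review is still on the list.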

Dates, Dates, Dates

I learned the importance of date formats and localization when I built a VBS script (this was 15 years ago, don’t shame me) that checked for successful backup dates. It worked fine… until we got our first American client. The dd/mm/yyyy parsing I was doing worked really well until there was a 13 in the mm position. Then the entire thing crashed. I learned two things that day: 2010 Gavsto was a bad coder, and ISO 8601 was a thing (the best date format ;)). Dates get really complicated once you account for regional differences, not just in how different people and countries structure their dates but also in how they prefer to see them from a localization perspective (ISO 8601, dots instead of slashes, leading zeroes, translated month names, etc.).
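That failure is easy to reproduce. The original script was VBScript, so the following is only a Python re-creation of the idea, with hypothetical function names: a parser hard-coded to dd/mm/yyyy, fed a US-style date, next to the ISO 8601 equivalent.

```python
from datetime import date, datetime
from typing import Optional


def parse_uk_date(text: str) -> date:
    """Parse a date while assuming it is always dd/mm/yyyy."""
    return datetime.strptime(text, "%d/%m/%Y").date()


def try_parse_uk(text: str) -> Optional[date]:
    """Same assumption, but return None instead of raising on bad input."""
    try:
        return parse_uk_date(text)
    except ValueError:
        return None


def parse_iso_date(text: str) -> date:
    """Parse an ISO 8601 calendar date (yyyy-mm-dd); no regional ambiguity."""
    return date.fromisoformat(text)


if __name__ == "__main__":
    print(try_parse_uk("03/13/2010"))    # US-style 13 March: no month 13, so None
    print(parse_uk_date("03/05/2010"))   # US-style 5 March is read as 3 May
    print(parse_iso_date("2010-03-13"))  # unambiguous
```

The crash on a 13 is arguably the lucky case. A US date such as 03/05/2010 parses without complaint and simply means the wrong day, which is much harder to notice.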

Then there are time zones. Oh god, time zones. This is a real monster. In our scenario, if Tech A adds a note in London at 2:15pm BST, and Tech B views it in New York, should they see 2:15pm? What about daylight saving time? Did you know that not all countries make their daylight saving changes at the same time? This makes it genuinely hard to get filters like “Last 24 hours” working in a standardised way across countries. Anyway, dates need lots of consideration. They are a perfect microcosm of why robust software development considers the bigger picture beyond the developer’s own machine and location.
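One common way to tame this (a general pattern, not a claim about any specific product) is to store every timestamp in UTC and convert only when displaying it. The sketch below walks through the London and New York scenario in Python. The helper names are hypothetical, and the fixed `BST` and `EDT` offsets are a simplification for a summer date; real code would more likely use IANA zone names (for example via the standard `zoneinfo` module) so that daylight saving changes are handled by the time zone database.

```python
from datetime import datetime, timedelta, timezone

# Simplifying assumption: fixed summer offsets instead of full DST rules.
BST = timezone(timedelta(hours=1), "BST")   # London in summer
EDT = timezone(timedelta(hours=-4), "EDT")  # New York in summer


def to_storage(local_time: datetime) -> datetime:
    """Normalise a timezone-aware timestamp to UTC before saving it."""
    if local_time.tzinfo is None:
        raise ValueError("refusing to store a timestamp with no time zone")
    return local_time.astimezone(timezone.utc)


def to_display(stored_utc: datetime, viewer_tz: timezone) -> datetime:
    """Convert a stored UTC timestamp into the viewer's own time zone."""
    return stored_utc.astimezone(viewer_tz)


def within_last_24_hours(stored_utc: datetime, now_utc: datetime) -> bool:
    """A 'Last 24 hours' filter evaluated on UTC instants, not wall clocks."""
    return timedelta(0) <= now_utc - stored_utc <= timedelta(hours=24)


if __name__ == "__main__":
    created = datetime(2025, 6, 2, 14, 15, tzinfo=BST)  # Tech A, 2:15pm BST
    stored = to_storage(created)
    print(stored.isoformat())                   # what gets saved
    print(to_display(stored, EDT).isoformat())  # what Tech B sees
```

With these offsets Tech B sees 9:15am, the same instant as Tech A’s 2:15pm. Comparing UTC instants also gives the “Last 24 hours” filter a single definition regardless of where the viewer sits.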

Validation + Limits and QA; the Chaos Engineers of SaaS

Getting validation and limits right at the start is critical. How many notes do you allow per device? How many characters can be added per note? When you’re looking after as many devices as NinjaOne does, something like that can potentially run into many terabytes of storage for text alone, which is crazy, but that’s the scale we have to think about when building. The entire organization is constantly thinking about scalability. If it’s not loading lightning fast on 100k-plus devices, it’s broken as far as I am concerned.
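A rough sketch of what those limits look like in code, and of the arithmetic behind the terabytes comment, follows. Every number here is illustrative: the 4,000-character cap echoes the figure in the requirements list, while the 50-notes-per-device cap, the device count in the usage line, and all function names are assumptions made up for this example.

```python
MAX_NOTE_CHARS = 4000        # illustrative cap, echoing the requirements list
MAX_NOTES_PER_DEVICE = 50    # hypothetical cap
MAX_UTF8_BYTES_PER_CHAR = 4  # worst case for a single character in UTF-8


class NoteValidationError(ValueError):
    """Raised when a note breaks one of the configured limits."""


def validate_new_note(text: str, existing_note_count: int) -> str:
    """Return the cleaned note text, or raise if a limit is exceeded."""
    cleaned = text.strip()
    if not cleaned:
        raise NoteValidationError("note is empty")
    # len() counts code points; some emoji count as more than one "character".
    if len(cleaned) > MAX_NOTE_CHARS:
        raise NoteValidationError("note exceeds the character limit")
    if existing_note_count >= MAX_NOTES_PER_DEVICE:
        raise NoteValidationError("device already has the maximum number of notes")
    return cleaned


def worst_case_bytes(device_count: int) -> int:
    """Upper bound on note storage if every device maxed out every note."""
    return (device_count * MAX_NOTES_PER_DEVICE
            * MAX_NOTE_CHARS * MAX_UTF8_BYTES_PER_CHAR)


if __name__ == "__main__":
    # Hypothetical fleet of 10 million devices.
    print(worst_case_bytes(10_000_000) / 1e12, "TB")
```

With those made-up numbers the ceiling is 8 TB of raw text before indexes, audit history, or replicas. Nobody expects every device to hit every limit, but the limit is what defines the worst case you have to plan for.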

Here’s the other thing. If you’ve never heard of a QA (Quality Assurance) Engineer, they are people hired specifically to break you and your feature’s code, and I LOVE THEM. That’s why having appropriate validation and limits in place from the start at least reduces the scope of things they can break, and they will break things. It’s their mission in life.

We don’t break things. We aggressively validate their fragility.

- NinjaOne QA Engineers

Design: More than just making it pretty

Now we have the requirements, we need to get a design. This is another area where it’s really easy to articulate the difference in time spent between a comprehensive approach and a more surface level one.

When professional software teams talk about “design,” they mean the whole User Experience (UX) and User Interface (UI). It’s not just about looks; it’s about usability, efficiency, and accessibility. Here’s how approaches can differ when considering effort and time:

| Feature Dimension | ✅ Comprehensive Approach | ❌ Surface-Level Approach |
|---|---|---|
| 🎯 Focus | User-Centric: Based on research (who users are, tasks, goals). Designs solve their problems. | Individual-Centric: Based on assumptions or easiest code path. |
| 🗺️ Structure | Intuitive: Logical info layout, clear navigation. Easy to find things. | Haphazard: Confusing structure, hard-to-find features. |
| ✨ Interaction | Efficient: Smooth workflows, clear feedback (loading, success, errors), prevents mistakes. | Clunky: Awkward steps, minimal feedback, easy to mess up. |
| 🎨 Visuals/Style | Consistent: Uses Design System, professional look, readable, clean. Builds trust. Supports dark mode (Soon™). | Inconsistent: Mixed styles, cluttered, looks unprofessional. |
| ♿ Accessibility | Inclusive: Built for WCAG (keyboard, screen reader, contrast). Often legally required. | Ignored: Unusable for people with disabilities. |
| Responsiveness | Adaptive: Works well on various screen sizes & browsers. | Rigid: Breaks or looks bad on different screens/browsers. |
| 🌍 Global Design | World-Ready: Handles text length changes, cultural nuances. | Breaks: Layout fails with translations. |
| 🧪 Process | Iterative: Uses prototypes, real user testing, refines based on feedback before coding. | Direct-to-Code: Skips testing, assumes usability, costly rework later. |

Designers work with Product Managers and Engineering Teams all through the development lifecycle. At Ninja, this process sees multiple rounds of multiple designers, product managers and even executive leadership reviewing the design and user experience.

Off to see the wizards (Engineering; Building Assets vs Creating Liabilities)

We’ve got the stories, we’ve written the high-level acceptance criteria - now we need to consider how to implement it. Up to now we’ve not really even talked about implementation details and this is intentional. Good product requirements are about the WHAT and the WHY, and are largely free of implementation details (HOW).

In my experience, how software is coded and managed by engineers in a professional setting differs enormously from a quick hack or an internal script. It’s about building for the long term, focusing on reliability, security, maintainability, and scalability.

| Engineering Aspect | ✅ Comprehensive Approach | ❌ Surface-Level Approach |
|---|---|---|
| 🏗️ Code Quality | Readable & Maintainable: Clean, well-commented, consistent style (linters enforced). | Write-Only: Messy, inconsistent, hard for anyone else (or future self) to read. |
| 🏛️ Architecture | Scalable & Resilient: Uses design patterns, considers future growth, performance under load. | Brittle & Rigid: Often monolithic (“Big Ball of Mud”), ignores scale, hard to change. |
| 🧪 Testing | Rigorous & Automated: High coverage (Unit, Integration, E2E tests) run automatically (CI). | Minimal & Manual: “Works on my machine,” maybe some manual clicks, easily breaks. |
| 🔒 Security | Built-In: Follows secure practices, validates input, encodes output, scans dependencies. | Afterthought: Could be vulnerable (XSS, SQLi), may use outdated/insecure libraries. |
| ⚠️ Errors & Logging | Robust: Handles errors gracefully, provides clear diagnostic logs for troubleshooting. | Fragile: Crashes easily, vague errors (if any), minimal/useless logging. |
| 💾 Data Handling | Safe & Performant: Uses ORMs or safe queries (no SQLi), plans DB migrations, uses indexes, considers caching. | Risky: Prone to SQL injection, manual unplanned DB changes, ignores performance. |
| 🌿 Version Control (Git) | Disciplined: Clear commit history, branching strategy, mandatory Code Reviews. | Chaotic: Vague/huge commits, messy branches (or none), no code reviews. |
| 🧩 Dependencies | Managed: Carefully selected libraries or written in-house, regularly updated, vulnerability scanned. | Unmanaged: Random libraries added, rarely updated, security risks ignored. |
| 🔌 APIs | Stable & Documented: Clear contracts, versioned, documented (e.g., OpenAPI/Swagger). | Ad-hoc & Brittle: Undocumented endpoints, breaks consumers frequently. |
| 🚀 Deployment | Automated & Monitored: CI/CD pipeline, Infrastructure as Code, monitoring, alerting, rollback plans. | Manual & Risky: e.g., Manual file upload, “hope deployment,” no monitoring/rollback. |
| 🛠️ Maintainability | Sustainable: Easy for the team to understand, modify, and extend safely over time. | Technical Debt: Fragile, complex, scary to change without breaking things. |
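To make one row of that table concrete, here is what “safe queries” and “uses indexes” can look like for the notes feature. This is a minimal sketch using Python’s built-in `sqlite3` module; the `device_notes` table, its columns, and the helper functions are all hypothetical, chosen only to illustrate parameterised queries rather than any real schema.

```python
import sqlite3
from typing import List


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a hypothetical notes table plus an index for per-device lookups."""
    conn.execute(
        "CREATE TABLE device_notes ("
        " id INTEGER PRIMARY KEY,"
        " device_id INTEGER NOT NULL,"
        " author TEXT NOT NULL,"
        " body TEXT NOT NULL,"
        " created_at_utc TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE INDEX idx_device_notes_device"
        " ON device_notes (device_id, created_at_utc)"
    )


def add_note(conn: sqlite3.Connection, device_id: int, author: str,
             body: str, created_at_utc: str) -> int:
    """Insert a note using ? placeholders; user text is never spliced into SQL."""
    cursor = conn.execute(
        "INSERT INTO device_notes (device_id, author, body, created_at_utc)"
        " VALUES (?, ?, ?, ?)",
        (device_id, author, body, created_at_utc),
    )
    conn.commit()
    return cursor.lastrowid


def notes_for_device(conn: sqlite3.Connection, device_id: int) -> List[str]:
    """Return note bodies for one device, newest first."""
    rows = conn.execute(
        "SELECT body FROM device_notes WHERE device_id = ?"
        " ORDER BY created_at_utc DESC",
        (device_id,),
    ).fetchall()
    return [row[0] for row in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    add_note(conn, 1, "tech_a", "Replaced PSU", "2025-06-02T13:15:00Z")
    add_note(conn, 1, "tech_b", "x'); DROP TABLE device_notes;--",
             "2025-06-03T09:00:00Z")
    print(notes_for_device(conn, 1))
```

Because the note body travels as a bound parameter, the hostile-looking second note is stored as plain text and the table survives. Building the same statement with string concatenation is how the “Surface-Level” column ends up prone to SQL injection.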

The bottom line here is that there is a LOT that professional engineering teams consider when they are building features, and much of it gets ignored outside of professional development teams.

What’s next? TESTING!

QA & Testing: Building Confidence vs. Crossing Fingers

Shipping code without proper testing is like sailing a ship with a hole in the bottom. Ninja, for example, invests heavily in Quality Assurance (QA) and testing throughout the development lifecycle. It’s about much more than just seeing if the code runs and the feature works.

| Testing Aspect | ✅ Thorough Testing Approach | ❌ Surface-Level Testing Approach |
|---|---|---|
| 🧑‍🔬 Role & Mindset | Dedicated QA Role/Team: Trained testers with a “how can I break this?” quality-focused mindset. Trust me, QA make a game out of breaking things! | Developer Tests Own Code: Inherent bias, “make it work” focus. Or no testing at all. |
| 🔬 Scope & Types | Comprehensive Coverage: Unit, Integration, End to End, API, Exploratory, Security, Performance, Usability, Accessibility tests. | “Happy Path” Focused: Checks basic functionality works as intended. Ignores edge cases & non-functional aspects. |
| 📝 Process/Planning | Structured: Formal Test Plans, detailed Test Cases, traceability to requirements, clear bug tracking. | Ad-hoc & Informal: Maybe a quick mental checklist, often unstructured “poking around.” |
| 🤖 Test Automation | Extensive & Integrated (CI/CD): High % of tests automated (Unit, API, Regression) for fast feedback. | Mostly Manual: Relies on repetitive manual clicking. Automation is minimal, brittle, or non-existent. |
| Testing Environments | Dedicated & Controlled: Separate QA/Staging environments mirroring production; managed test data. | Uncontrolled: Tests on local dev machine (“works for me!”), maybe directly in production (yikes!). |
| 🔄 Regression Testing | Systematic Safety Net: Automated suites run constantly to ensure new changes haven’t broken anything existing. | Hope-Driven: Assumes/hopes nothing else broke. Maybe some random spot checks. |
| 🐛 Bug Management | Formal & Tracked: Detailed bug reports, prioritization, assignment, and crucial verification of fixes. | Informal & Loose: Bugs mentioned in chat/email, might get lost, fixes often deployed unverified. |
| ⚡ Non-Functional | Deliberate Focus: Specific tests designed to check Performance, Security vulnerabilities, Accessibility, Usability. | Usually Ignored: Assumes because it works it’s “good enough.” |
| 📊 Test Data | Realistic & Diverse: Uses data covering edge cases, different user types, boundary conditions, and realistic volumes. | Simple & Limited: Uses basic data that doesn’t expose potential issues lurking in edge cases or scale. |
| 🎯 Goal | Ensure Overall Quality: Validate functionality, reliability, security, performance, and user experience meets standards. | Confirm Basic Function: Check if the specific code added seems to work in isolation. |

Professional QA is a proactive, disciplined process embedded throughout development to mitigate risk and ensure high-quality outcomes. It’s not simple to do, and it’s hard to get right, but it’s a job that professional development teams take seriously.
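For a flavour of the difference between “happy path” testing and testing boundary conditions, here is a small automated suite for the note length rule. It is only a sketch using Python’s standard `unittest` module: the 4,000-character limit, the `is_acceptable_note` function, and the test names are all assumptions made up for this example.

```python
import unittest

MAX_NOTE_CHARS = 4000  # hypothetical limit for illustration


def is_acceptable_note(text: str) -> bool:
    """Accept non-empty notes of at most MAX_NOTE_CHARS characters."""
    stripped = text.strip()
    return 0 < len(stripped) <= MAX_NOTE_CHARS


class NoteBoundaryTests(unittest.TestCase):
    def test_rejects_empty_and_whitespace(self):
        for text in ("", "   ", "\n\t"):
            with self.subTest(text=repr(text)):
                self.assertFalse(is_acceptable_note(text))

    def test_length_boundaries(self):
        # Exactly at the limit passes; one character over fails.
        self.assertTrue(is_acceptable_note("a"))
        self.assertTrue(is_acceptable_note("a" * MAX_NOTE_CHARS))
        self.assertFalse(is_acceptable_note("a" * (MAX_NOTE_CHARS + 1)))

    def test_accepts_non_english_text(self):
        for text in ("Größe prüfen", "サーバー再起動", "🔥 disk failing"):
            with self.subTest(text=text):
                self.assertTrue(is_acceptable_note(text))


def run_suite() -> unittest.TestResult:
    """Run the suite programmatically, as a CI job might."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(NoteBoundaryTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

A happy-path check would stop at “a short note saves”. The cases that catch real bugs are the empty note, the note exactly at the limit, the note one character over, and the note written in a language the developer does not speak.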

It all takes time and effort to do properly

My goal here is not to disparage potential quick paths to success (there’s absolutely a place for that) but more to showcase the effort and time spent at an enterprise development level. You can see through the above lifecycle of adding a new feature what is actually involved - consider that this is a relatively simple feature in comparison to others too. Ultimately, it takes time, patience and effort to deliver a professional, enterprise grade feature. PM/Design/Engineering/QA all inherently collaborate with meetings, handoffs, reviews, etc., and this also takes time to do properly. I have purposely left out large sections of other effort too (things like Deployment, Marketing, Documentation, Internal Enablement (Support/Sales), etc.).

Think of it like this: anyone can stack some bricks, but it takes architects, engineers, specialized crews, and rigorous inspections to build a skyscraper that withstands hurricanes. Enterprise software (at least some of it) is that skyscraper. The meticulous planning, design, engineering, and testing might seem slow compared to stacking bricks, but it’s what ensures the final product is strong, safe, accessible, and serves its purpose reliably for everyone inside.

There are definitely ways to speed this up in Enterprise development, some I haven’t touched on, but there’s no such thing as a quick change when you’re building enterprise software, because every change, no matter how small it seems, ripples through a complex system demanding careful consideration of design, security, testing, and global user impact. I fundamentally believe this is how you build good, quality products and features.
