It is no secret that ConnectWise has had a tough time recently. When you combine back-to-back critical security vulnerabilities with a seemingly uncertain future for the Automate product, it’s going to start worrying some people. As a Managing Partner of MSPGeek, I have what I feel is a distinct insight into the thought processes and decision making of many MSP partners at once. ConnectWise gets a lot of stick, especially in public places like Reddit. Usually, it’s just hot air or venting. That seems to have changed in the past month: partners are starting to take concrete action, either moving to or actively investigating alternative RMMs.

All RMMs are lacking

Personally, I don’t think any of the mainstream RMMs are ticking the right boxes at the moment. There’s a common joke I often hear about the current selection of RMMs: “Pick which one you hate the least”. None of them seem to be adapting or innovating to serve the needs that have surfaced over the past five years. Things like 365 integration/monitoring, security and compliance options, Intune integration, Azure integration, auto-documentation, cloud identity management, even modern-day patch management are either extremely weak or nonexistent in all the products I’ve looked at. In ConnectWise Automate’s case, nearly all of that is missing. It’s not adapting to the needs of our MSP, so I have to put custom solutions in place. The Automate agent feels like it is stuck in time, perpetually living in 2005 with its delayed communication mechanisms and its ancient .NET codebase. The purpose of this post is not to bash ConnectWise, but all of this started an interesting thought exercise for me. As much as I love the customizability of ConnectWise, I am not doing my job properly as CTO of my MSP if I am not at least evaluating our position. Which raises the question: how hard would it be to move to a different RMM?

How to give yourself an existential crisis

After thinking about it for 15 seconds, I curled up in a corner and cried for 15 minutes. For me at least, it would be an extraordinary amount of effort. Hundreds of scripts, at least fifty production reports, customized third-party and OS patching, custom integrated 365 and security monitoring and a whole lot more. It would be a huge project, one likely to impact the bottom line and future of my business. Time spent migrating RMMs is time not spent on improving and innovating my actual MSP and the solutions we provide to clients. It’s a problem that has to be approached delicately, and slowly. Which brings me to the title of this post: instead of putting a huge chunk of effort into migrating from one RMM to another, that effort should be put into removing the reliance on RMM-specific features, like scripting and monitoring, altogether. Then it doesn’t matter what RMM you are on.

In rides PowerShell, on its Noble Steed

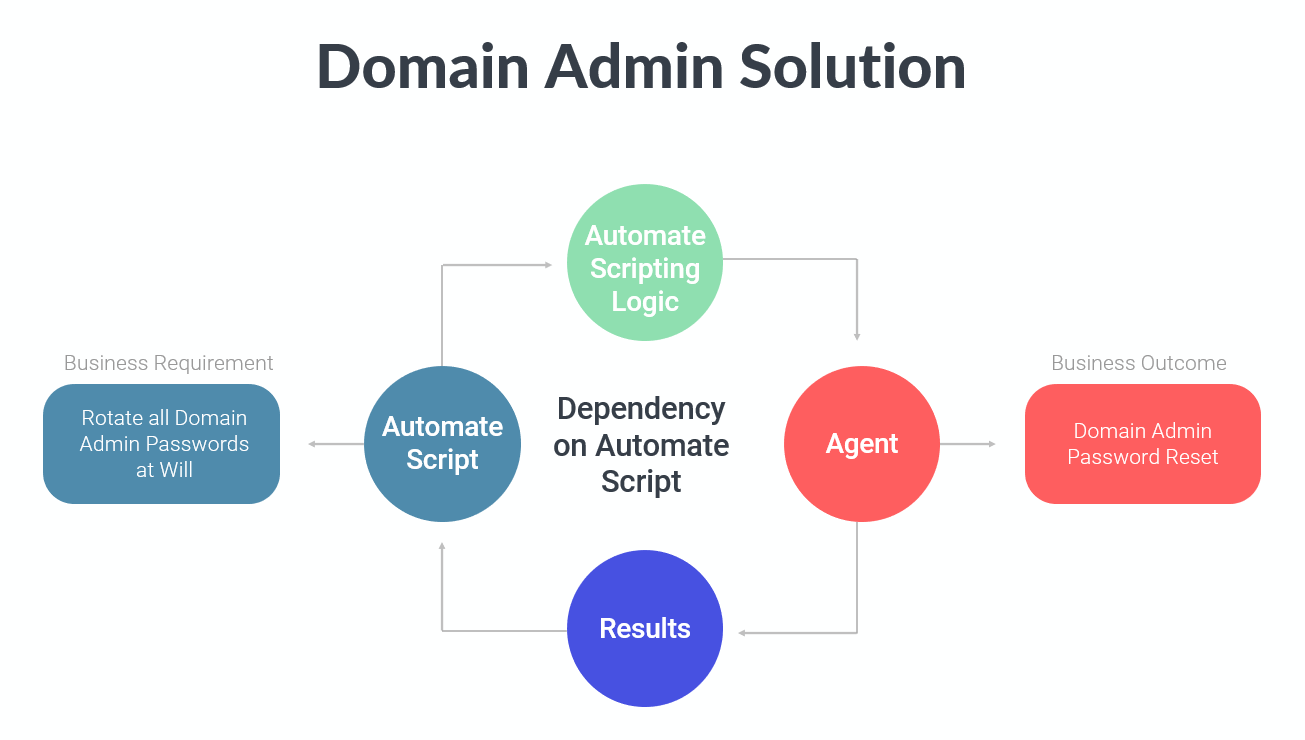

Simply put, I am going to start the long and arduous process of migrating monitors and scripts into a more generic format. Here is roughly how the process works at the moment. I’ve picked a typical automation task to demonstrate the point:

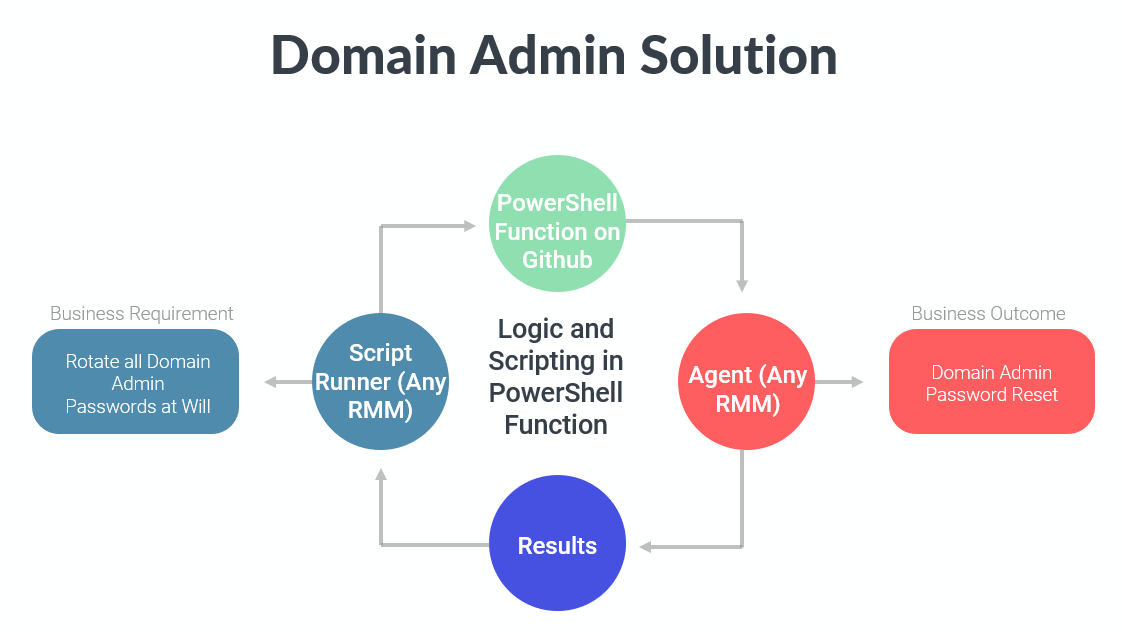

There’s a huge reliance in this model on what is essentially an Automate script. Generating a password, updating the password, checking the update was successful and so on: all of that logic is built into a platform-specific model. I can’t take that logic out of Automate with me and move it to another RMM. Or can I? I can if I move all of the scripting logic outside of the Automate script and into a PowerShell function that’s not even stored in the RMM. In this case, the RMM becomes a glorified script runner and a parser of results:

Let me explain a bit more about how this works. Firstly, we ignore the RMM completely and we write a PowerShell function to do the job. That function could look a bit like this (this is not designed to be an exhaustive example, but more to represent a structure):

    function Set-ICDomainAdminPassword {
        <#
        .SYNOPSIS
        Resets a Domain Admin Password

        .DESCRIPTION
        Checks the account is a Domain Admin, generates a secure password if needed and resets the password

        .PARAMETER UserName
        The Domain Admin's Username

        .PARAMETER Password
        The password to set which is optional

        .OUTPUTS
        String: "Password reset successful" or "Password reset failed with $Exception"

        .NOTES
        Version: 1.0
        Author: Gavin Stone
        Creation Date: 2020-07-27
        Purpose/Change: Initial script development

        .EXAMPLE
        Set-ICDomainAdminPassword -UserName ICAdmin -Password littleBOBBYtables

        .EXAMPLE
        Set-ICDomainAdminPassword -UserName ICAdmin
        #>
        param (
            [Parameter(Mandatory = $true)]
            [string]$UserName,

            [Parameter(Mandatory = $false)]
            [string]$Password
        )

        # Logic to check the Domain Admin account exists, and is a Domain Admin

        # If the $Password has not been sent, generate one

        # Attempt to reset the password, capture and check the result

        # $FinalResult is populated by the logic above
        Write-Output $FinalResult
    }

We test that the script works correctly and as expected outside of the RMM, then we upload it into a private repository on GitHub. Instead of putting all the logic inside an RMM script, we now do only one thing in the RMM script: we call the function directly from GitHub along with the parameters we want to use:

    $wc = New-Object System.Net.WebClient
    $wc.Headers.Add('Authorization','token YOURGITHUBAPITOKEN')
    $wc.Headers.Add('Accept','application/vnd.github.v3.raw')
    $wc.DownloadString('https://raw.githubusercontent.com/the/url/to/your.ps1') | iex
    Set-ICDomainAdminPassword -UserName ICAdmin

That’s it: you’ve successfully abstracted your business logic out of your RMM. That’s 90% of the job. If you’re not 100% sold on having the script in GitHub, simply put the PowerShell function inside the RMM script and call it at the end. You could even host the ps1 on your own Automate server and call it in the same way; just change the URL string.
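As an alternative to the raw URL, the same download can go through the GitHub REST API contents endpoint, which accepts the `application/vnd.github.v3.raw` Accept header used above. What follows is only a sketch under assumptions: `New-ICGitHubRequest`, the owner `my-org`, the repository `rmm-scripts`, the `main` branch and the file path are all hypothetical, and the token is a placeholder. The request is built as plain data so it can be inspected without touching the network.

```powershell
# Sketch only: New-ICGitHubRequest and every owner/repo/path value are hypothetical.
function New-ICGitHubRequest {
    param (
        [Parameter(Mandatory = $true)][string]$Owner,
        [Parameter(Mandatory = $true)][string]$Repo,
        [Parameter(Mandatory = $true)][string]$Path,
        [Parameter(Mandatory = $true)][string]$Token,
        [string]$Ref = 'main'
    )

    # $($Path) is wrapped so the following '?' is not read as part of the variable name
    @{
        Uri     = "https://api.github.com/repos/$Owner/$Repo/contents/$($Path)?ref=$Ref"
        Headers = @{
            Authorization = "token $Token"
            Accept        = 'application/vnd.github.v3.raw'
        }
    }
}

$request = New-ICGitHubRequest -Owner 'my-org' -Repo 'rmm-scripts' `
    -Path 'Set-ICDomainAdminPassword.ps1' -Token 'YOURGITHUBAPITOKEN'

# On a real agent (left commented out so the sketch has no side effects):
# [Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12  # may be needed on older Windows PowerShell
# $code = Invoke-RestMethod @request
# . ([scriptblock]::Create($code))   # dot-source so the function is defined in this scope
# Set-ICDomainAdminPassword -UserName ICAdmin
```

Keeping the request construction separate from the download means the only line that changes between scripts is the `-Path` value.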

The benefits of having your business logic in GitHub

- You can utilize proper source control for your scripts, maintaining structured, audited changes for multiple people

- It’s entirely RMM independent. If your RMM can run PowerShell, you can use this method

- If you do move RMM, you have one thing to move per script with the benefit of knowing it will just work in any other RMM

- You can write logic that works on Linux and Macs too with PowerShell Core

- Business logic is a lot easier to read as you can load scripts in an editor that actually does a good job of displaying code (I use VSCode)

- The scripts are a lot easier to test

- The scripts are a lot easier to write

- You can leverage technologies like Intune to run PowerShell scripts too, further removing RMM reliance

- Your business logic is in one easy to access repository. It’s immediately obvious what custom things you have in place!
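To illustrate the points about writing and testing, here is a minimal sketch of how the "generate a password if none was sent" step could live in its own small function and be checked from any PowerShell prompt, with no RMM involved. `New-ICRandomPassword`, its default length and its character set are assumptions made for illustration and are not part of the function shown earlier.

```powershell
# Hypothetical helper: name, default length and character set are illustrative assumptions.
function New-ICRandomPassword {
    param (
        [ValidateRange(8, 128)]
        [int]$Length = 20
    )

    # Look-alike characters (l, I, O, 0, 1) are left out to make passwords easier to read aloud
    $chars = 'abcdefghijkmnopqrstuvwxyzABCDEFGHJKLMNPQRSTUVWXYZ23456789!#%+-=?@'
    $bytes = New-Object byte[] $Length
    $rng   = [System.Security.Cryptography.RandomNumberGenerator]::Create()
    try {
        $rng.GetBytes($bytes)
    }
    finally {
        $rng.Dispose()
    }

    # Map each random byte onto the character set (the modulo adds a slight bias, fine for a sketch)
    -join ($bytes | ForEach-Object { $chars[$_ % $chars.Length] })
}

# A quick check that runs anywhere PowerShell does
$password = New-ICRandomPassword -Length 24
if ($password.Length -ne 24) { throw 'Unexpected password length' }
```

Because the function takes parameters and returns a value, the same check can later move into a proper test framework without touching the logic.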

You can do the same with Monitors too

In exactly the same way, you can abstract your monitoring too. This can be anything from a Registry check to a service check to anything you can imagine. If you’re in Automate, this needs to be a single-line script:

    "%windir%\System32\WindowsPowerShell\v1.0\powershell.exe" -noprofile -command "& {$wc = New-Object System.Net.WebClient;$wc.Headers.Add('Authorization','token YOURGITHUBTOKEN');$wc.Headers.Add('Accept','application/vnd.github.v3.raw');$wc.DownloadString('https://raw.githubusercontent.com/the/url/to/your/monitor.ps1') | iex;}"

In the script, you simply Write-Output the results and then set the monitor conditions inside the RMM.

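For illustration, a hosted monitor script might look something like the sketch below. It reduces each check to one line of output that the RMM monitor condition can match on. The function name `Get-ICDiskMonitorResult`, the 10% threshold and the `OK`/`FAILED` prefixes are all assumptions, so adapt them to whatever your monitor conditions expect.

```powershell
# Hypothetical monitor: names, threshold and output wording are illustrative assumptions.
function Get-ICDiskMonitorResult {
    param (
        [Parameter(Mandatory = $true)][string]$Name,
        [Parameter(Mandatory = $true)][double]$FreeBytes,
        [Parameter(Mandatory = $true)][double]$TotalBytes,
        [double]$MinimumFreePercent = 10
    )

    $freePercent = [math]::Round(($FreeBytes / $TotalBytes) * 100, 1)
    if ($freePercent -lt $MinimumFreePercent) {
        "FAILED: $Name has $freePercent% free"
    }
    else {
        "OK: $Name has $freePercent% free"
    }
}

# Check every ready fixed drive and emit one line per drive for the RMM to parse
[System.IO.DriveInfo]::GetDrives() |
    Where-Object { $_.IsReady -and $_.DriveType -eq 'Fixed' -and $_.TotalSize -gt 0 } |
    ForEach-Object {
        Write-Output (Get-ICDiskMonitorResult -Name $_.Name -FreeBytes $_.AvailableFreeSpace -TotalBytes $_.TotalSize)
    }
```

With this shape, the monitor condition inside the RMM only needs to look for the `FAILED` prefix, and the threshold logic stays in the repository.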
This is not bullet-proof

Though this works well for lots of different types of scripts, it doesn’t work as well for others. You may need to expand the script to more than one line in the RMM depending on what the script is doing. You may need to generate a ticket, for example, and most of the time you’d need to do this inside the RMM script. That said, most of the scripts I can’t move to PowerShell because they rely on Automate functions are scripts that fix or check for functionality that shouldn’t be broken in the first place, so I wouldn’t need to migrate them to a new RMM. Your mileage may vary.

Some things to consider: GitHub Security

I achieve this securely by having an organization set up in GitHub with a number of people in it, one of which is an account called ic-read-only. This is the account I generate the API token in. It has limited, read-only access to the private repository containing the business logic. This is an important step! The last thing you want is someone on an agent intercepting your API key and having full access to edit the PowerShell that all your other agents are calling.
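A possible extra safeguard, which is not part of the setup described above, is to pin each script to a known SHA-256 hash and refuse to run anything that does not match. The sketch below assumes the hash is taken over the UTF-8 bytes of the downloaded text; `Get-ICSha256`, `Test-ICScriptHash` and the sample script text are hypothetical.

```powershell
# Hypothetical helpers: compare downloaded script text against a pinned SHA-256 hash.
function Get-ICSha256 {
    param ([Parameter(Mandatory = $true)][string]$Text)

    $sha = [System.Security.Cryptography.SHA256]::Create()
    try {
        $bytes = [System.Text.Encoding]::UTF8.GetBytes($Text)
        # Lower-case hex string, two characters per byte
        -join ($sha.ComputeHash($bytes) | ForEach-Object { $_.ToString('x2') })
    }
    finally {
        $sha.Dispose()
    }
}

function Test-ICScriptHash {
    param (
        [Parameter(Mandatory = $true)][string]$ScriptText,
        [Parameter(Mandatory = $true)][string]$ExpectedSha256
    )

    # -eq on strings is case-insensitive, so upper-case hex hashes also match
    (Get-ICSha256 -Text $ScriptText) -eq $ExpectedSha256
}

# Stand-in for a reviewed script; in practice the pinned hash is recorded at review time
$reviewed = 'Write-Output "hello"'
$pinned   = Get-ICSha256 -Text $reviewed

# Stand-in for the text returned by DownloadString
$downloaded = $reviewed
if (Test-ICScriptHash -ScriptText $downloaded -ExpectedSha256 $pinned) {
    . ([scriptblock]::Create($downloaded))
}
else {
    Write-Output 'FAILED: script hash mismatch'
}
```

The trade-off is that every change to a script also means updating the pinned hash wherever it is stored, so this suits a small number of high-risk scripts better than the whole repository.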

What if I can’t write PowerShell?

This is pretty much a skill that I would now expect from any competent Windows engineer. It’s never too late to learn. Start here: this is the only book that actively sits on my desk, PowerShell in a Month of Lunches.

Final thoughts

I don’t see myself moving from Automate for a while yet, but I at least feel by starting to do the above I am preparing for the future – whatever it may hold. I’d love to hear what others think about this approach, including ways it could be improved.

Absolutely agree. We also use psh more and more; it is a must as Automate has so many (monitoring) limitations.

Using Github as a repository is a great idea; much appreciated. Seems better than distributing scripts internally.

Thank you for this. Great insight and I’ve been meaning to explore and learn how to use Github.

I’ve been saying for at least a few months we have been working on using PowerShell and making our scripts more portable.

I haven’t put any specific to it yet, but we have been trying to at least make new scripts where we can in posh

I must say I wasn’t expecting this article from you but I 100% agree. I have the exact same perspective, and my solution, too, was to use PowerShell. It is the only path forward at this time, but if an RMM vendor wants to step up to the plate and produce a product that does what it should, as well as increase productivity for the MSP by manufacturing the scripts I shouldn’t have to, well, I would fully welcome that as well.

I hate having to leave ScreenConnect :/

I agree wholeheartedly.

The only question I have is where do you store the API key to authenticate with GitHub? Ideally I’d want that centrally stored in Automate, so if it had to be revoked it can be updated without having to edit every single Automate script…

Two steps in your Automate-side script: put the PAT key in your Properties table, and pull it back to your automation with “SQL Get value”:

    SELECT Value FROM `LabTech`.`properties` WHERE `Name` = 'PATToken'

Then, your PowerShell script is called with a -PatToken "%sqlresult%" parameter:

    param ($PatToken)
    $wc = New-Object System.Net.WebClient
    # Double quotes so that $PatToken is expanded into the header value
    $wc.Headers.Add('Authorization',"token $PatToken")
    $wc.Headers.Add('Accept','application/vnd.github.v3.raw')
    $wc.DownloadString('https://raw.githubusercontent.com/the/url/to/your.ps1') | iex
    Set-ICDomainAdminPassword -UserName ICAdmin

I am considering developing a framework for this that would work with Azure Functions, Intune, and other MS-based technologies. I feel like MS is the one world I am not escaping as an MSP and they seem to be getting more MSP-friendly in their architecture. Not sure if anyone has tackled this already, but I think there is at least some serious supplemental potential anyway.

I completely agree. I’ve started with Automate 3 years ago and 100% of custom scripts use PowerShell for the logic.


Easier said than done. Most of our scripts perform additional tasks on the RMM side. They execute queries to pull data from the CW Automate DB as well as write data into the DB. The ticketing system integration that you mentioned is only a small example. Some of our scripts are completely offline, meaning that all the steps are just RMM side. In addition to scripts we also have over 20 custom CW Automate plugins that integrate with other platforms. We have plugins that place API calls to 365, Proofpoint, Webroot, SentinelOne, KnowBe4, Verizon, Dark web, Meraki, ITGlue, DUO, TextMagic … There are also remote plugins that sync data from the remote RMM agents. All the data is later used in the reports that are also developed in CW Automate. Choosing your RMM platform is a commitment and you can’t truly escape being dependent on that platform unless you stick to very basic features that the platform provides. In our case it would probably take a few years to move everything away from CW Automate and we would then be stuck with another platform. The only alternative is to create your own platform, but it would require resources that none of the MSPs have. In my opinion you have to pick a platform that gives you the most flexibility and then treat it as a framework. You need to be ready to develop your own tools that integrate with the platform you select. CW support is terrible, but I can’t think of any other RMM tool that gives you the same level of control.

This article was great and it made me start the process of migrating from Labtech scripts to remotely hosted PowerShell scripts.

I tried very hard for a long time to get GitHub working, but they recently made a change that makes this method of connection no longer possible. If anyone here has managed to get private distro connections working, could you please post instructions or a guide?

We had PowerShell scripts with our previous RMM (Datto RMM).

We migrated a few months ago to Automate, and it was so much easier to just move everything we had working in the previous RMM.

As an improvement, I use Try – Catch – Finally in all our PowerShell monitoring scripts. Finally is the section that returns the data to the RMM, so all I had to change was the way Finally outputs the message to the RMM tool, without reworking the main logic of the scripts.

Somebody mentioned using Internal Monitors in Automate (based on SQL queries run on the Automate DB). Well, I am staying as far away from those as I can, for the following reasons:

1. They are RMM platform specific

2. Remote Monitors (PowerShell scripts run on agents) are the quickest at reporting faults. Internal Monitors can take longer to report a fault.
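The Try – Catch – Finally approach described in the comment above might look something like the following sketch. It is one reading of the pattern, not the commenter's actual code: `Invoke-ICMonitorCheck`, the `OK`/`FAILED` wording and the sample checks are all hypothetical.

```powershell
# Sketch of the pattern; Invoke-ICMonitorCheck and the message format are hypothetical.
function Invoke-ICMonitorCheck {
    param (
        [Parameter(Mandatory = $true)][scriptblock]$Check
    )

    $message = 'FAILED: check did not run'
    try {
        # Main logic stays RMM-neutral and simply returns a description of what it found
        $detail  = & $Check
        $message = "OK: $detail"
    }
    catch {
        # Any terminating error in the check becomes a failure message
        $message = "FAILED: $($_.Exception.Message)"
    }
    finally {
        # The only RMM-facing line; change this when the RMM expects a different format
        Write-Output $message
    }
}

Invoke-ICMonitorCheck -Check { 'Spooler is running' }
Invoke-ICMonitorCheck -Check { throw 'Spooler is stopped' }
```

Moving to a different RMM would then mean editing the single line in the `finally` block rather than every check.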

Hi TeaBaker,

How are you managing to output the message to the RMM tool (Automate)? I’d like to be able to create tickets if an issue occurred within the PS Script.

Thanks!